A Closer Look at the Many Facets of Diffusion Models

Diffusion models are a powerful new type of generative model that has the potential to revolutionize many different fields, such as image generation, text generation, and music generation.

Imagine you are a painter who wants to create a realistic painting of a cat. You could start by sketching the cat's basic shape, and then gradually add more details, such as the fur, eyes, and nose. This is essentially the same approach that diffusion models use to generate images.

Or imagine you are a game developer who wants to create a new game with realistic graphics. You could use diffusion models to generate the game's assets, such as characters, objects, and environments. This could let you produce high-quality graphics while spending less time and money on manual art creation.

Another example is a company that wants to create a new marketing campaign. They could use diffusion models to generate realistic images of their products or services. This would allow them to create more engaging and persuasive marketing materials.

Diffusion models have the potential to revolutionize the way we create and interact with digital content. They are a powerful new tool that can be used to create realistic and engaging images, videos, and other media.

Let's dive into the details. The rest of the article is organized into the sections below.

- Introduction

- History

- Types of Diffusion Models

- Why Use Diffusion Models?

- How Do Diffusion Models Work?

- Examples of Diffusion Models

- Comparison with Other Generative Models

- Conclusion

Introduction

Diffusion models are a type of generative machine learning model that works by starting with a noisy image and then gradually removing the noise to reveal a realistic image. To learn how to do this, the model is trained to reverse a diffusion process, which is a mathematical operation that gradually adds noise to an image.

History

Chronological history of diffusion models:

- 2015: Sohl-Dickstein et al. introduce diffusion probabilistic models (DPMs) as a method to learn a model that can sample from a highly complex probability distribution.

- 2019: Song and Ermon propose the score-based generative modeling framework, which includes noise conditional score networks (NCSNs). NCSNs learn the gradient of the data distribution at several noise levels and generate images by following those gradients with Langevin dynamics.

- 2020: Ho et al. introduce denoising diffusion probabilistic models (DDPMs), which are easier to train than previous diffusion models and achieve state-of-the-art results on image generation tasks.

- 2021: DDPMs are extended to support conditional generation, allowing users to control the outputs of the model.

- 2022: Diffusion models are used to achieve new state-of-the-art results on a variety of tasks, including text generation, image generation, and 3D modeling.

Types of Diffusion Models

There are two main types of diffusion models:

- Denoising diffusion models: These models start with a noisy image and then learn to remove the noise to reveal a clean image.

- Generative diffusion models: These models start with a random noise image and generate realistic images by gradually removing the noise. Noise is added to real images only during training, so that the model can learn how to undo it.

Why Use Diffusion Models?

Diffusion models have several advantages over other types of generative models, such as:

- They can generate high-quality images with sharp details.

- They are relatively easy to train.

- They can be used to generate data from a variety of different domains, such as images, text, and music.

How Do Diffusion Models Work?

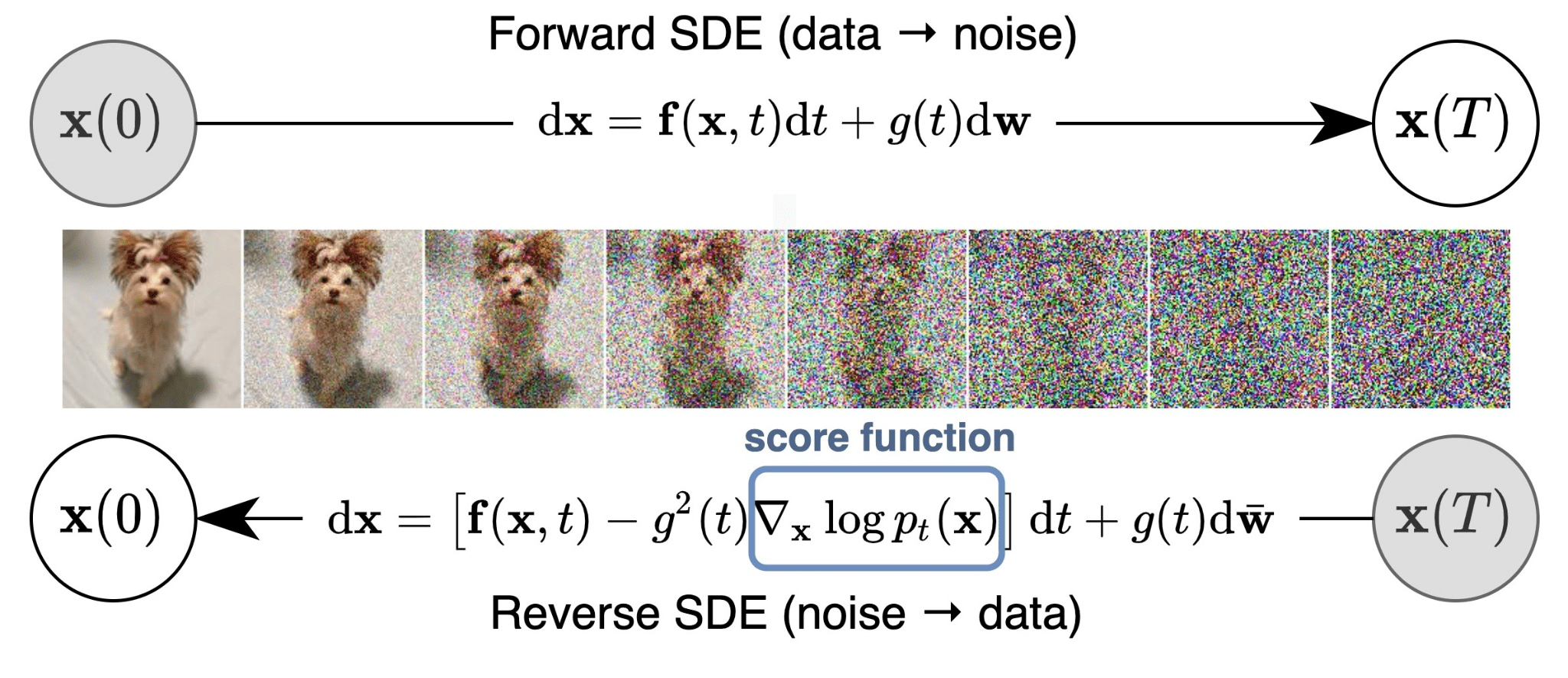

Diffusion models are built around a diffusion process, a mathematical operation that adds a small amount of noise to an image at every step. The amount of noise added at each step is determined by a parameter commonly called the noise schedule.

Generation runs this process in reverse, starting with a noisy image and ending with a clean image. At each step, the model removes some of the noise. It learns to do so by minimizing a loss function, which measures the difference between the original image and the denoised image (or, in many formulations, between the noise that was added and the noise the model predicts).
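The per-step noise amount described above can be sketched with NumPy. This is a minimal illustration that assumes the linear schedule and closed-form noising used in DDPM-style models; the function names `make_schedule` and `noise_to_step`, the schedule endpoints, and the flat gray test image are illustrative choices, not something this article specifies.

```python
import numpy as np


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear per-step noise amounts (betas) and the remaining signal fraction."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alpha_bar = np.cumprod(1.0 - betas)  # shrinks toward 0 as steps accumulate
    return betas, alpha_bar


def noise_to_step(x0, t, alpha_bar, rng):
    """Jump from a clean sample x0 straight to its noisy version at step t."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps


rng = np.random.default_rng(0)
betas, alpha_bar = make_schedule()
x0 = np.full((64, 64), 0.5)  # hypothetical flat gray "image"

for t in (0, 250, 999):
    x_t, _ = noise_to_step(x0, t, alpha_bar, rng)
    print(t, float(alpha_bar[t]), float(x_t.std()))
```

Early steps leave the image almost untouched, while by the last step almost none of the original signal remains and the sample is close to pure Gaussian noise.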

Let's dive into code

To understand how diffusion models work, it is helpful to first understand the concept of diffusion. Diffusion is a physical process in which particles move from an area of high concentration to an area of low concentration. For example, if you drop a drop of food coloring into a glass of water, the food coloring will eventually diffuse throughout the water until it is evenly distributed.

Diffusion models work in a similar way, but instead of particles, they are diffusing noise. In a simplified form, one step of the diffusion process can be represented mathematically as follows:

$$x_t = x_{t-1} + \sigma \cdot \mathcal{N}(0, 1)$$

where:

- $x_t$ is the data sample at time step $t$

- $x_{t-1}$ is the data sample at time step $t-1$

- $\sigma$ is the noise level

- $\mathcal{N}(0, 1)$ is a standard normal distribution
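To make the symbols above concrete, here is a small sketch that reads them as the update x_t = x_{t-1} + σ · N(0, 1), which is an assumption based on the definitions given. It applies that step repeatedly to many copies of a sample that starts at zero. The helper name `forward_step` and the chosen values of σ and the step count are illustrative.

```python
import numpy as np


def forward_step(x_prev, sigma, rng):
    """One noising step: x_t = x_{t-1} + sigma * N(0, 1)."""
    return x_prev + sigma * rng.standard_normal(x_prev.shape)


rng = np.random.default_rng(0)
sigma = 0.1
num_steps = 100

# Many independent copies of a sample that starts at exactly 0.
x = np.zeros(10_000)
for _ in range(num_steps):
    x = forward_step(x, sigma, rng)

empirical_var = x.var()
expected_var = num_steps * sigma**2  # variances of independent steps add up
print(empirical_var, expected_var)
```

Because each step adds independent noise, the spread of the samples grows with the number of steps, which is why a long enough forward process ends up looking like pure noise.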

To generate a new data sample, a diffusion model first starts with a random noise sample. It then gradually removes the noise by applying the reverse diffusion process, in which the model estimates the noise present at each step and subtracts it.

The model continues to remove noise until it reaches the desired level of cleanliness.

Diffusion models are trained using a variational inference algorithm. The goal of the training process is to learn a model that can accurately reverse the diffusion process. The model is trained by providing it with a set of clean data samples and noisy data samples. The model then learns to predict the clean data sample from the noisy data sample.
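The idea of learning to predict the clean sample from the noisy one can be illustrated with a deliberately tiny stand-in for a neural network. The sketch below assumes a hypothetical 1-D dataset and fits a linear denoiser by least squares; real diffusion models use deep networks and many noise levels, so treat this only as a toy version of the training objective.

```python
import numpy as np

rng = np.random.default_rng(0)
sigma = 0.5  # noise level used to corrupt the training data

# Clean training data: a hypothetical 1-D dataset clustered around 2.0.
x_clean = rng.normal(loc=2.0, scale=0.3, size=5000)
# Noisy counterparts produced by one forward noising step.
x_noisy = x_clean + sigma * rng.standard_normal(x_clean.shape)

# "Model": x_hat = a * x_noisy + b, fitted by minimizing squared error.
features = np.stack([x_noisy, np.ones_like(x_noisy)], axis=1)
(a, b), *_ = np.linalg.lstsq(features, x_clean, rcond=None)

x_denoised = a * x_noisy + b
mse_before = np.mean((x_noisy - x_clean) ** 2)
mse_after = np.mean((x_denoised - x_clean) ** 2)
print(mse_before, mse_after)
```

The fitted denoiser pulls noisy values back toward where the clean data lives, so its error against the clean samples is lower than that of the raw noisy inputs.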

Once the model is trained, it can be used to generate new data samples by starting with a random noise sample and then applying the reverse diffusion process.
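The generation loop described above can be sketched end to end on a toy problem. The example assumes DDPM-style ancestral sampling with a linear noise schedule, and it replaces the trained network with the exact noise estimate, which has a closed form only because the hypothetical data here is a 1-D Gaussian. The function name `sample` and all parameter values are illustrative.

```python
import numpy as np


def sample(num_samples, data_mean, data_std, num_steps=1000, seed=0):
    """DDPM-style reverse process for 1-D Gaussian data (toy example)."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-4, 0.02, num_steps)
    alphas = 1.0 - betas
    alpha_bar = np.cumprod(alphas)

    x = rng.standard_normal(num_samples)  # start from pure noise
    for t in range(num_steps - 1, -1, -1):
        # Stand-in for a trained network: the exact expected noise given x_t.
        mean_t = np.sqrt(alpha_bar[t]) * data_mean
        var_t = alpha_bar[t] * data_std**2 + (1.0 - alpha_bar[t])
        eps_hat = np.sqrt(1.0 - alpha_bar[t]) * (x - mean_t) / var_t

        # Remove the predicted noise for this step.
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alphas[t])
        # Re-inject a little fresh noise on every step except the last.
        if t > 0:
            x = x + np.sqrt(betas[t]) * rng.standard_normal(num_samples)
    return x


samples = sample(num_samples=10_000, data_mean=2.0, data_std=0.5)
print(samples.mean(), samples.std())
```

Starting from standard normal noise, the samples drift toward the data distribution as the steps run backward, so their mean and spread end up close to the `data_mean` and `data_std` passed in.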

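Here is a simple Python code example of the forward and reverse steps. It is a toy illustration rather than a trained diffusion model:

```python
import numpy as np
import matplotlib.pyplot as plt


class DiffusionModel:
    """Toy forward/reverse noising steps; no neural network is trained here."""

    def __init__(self, sigma):
        self.sigma = sigma  # noise level (standard deviation)

    def forward(self, x):
        # Forward step: add Gaussian noise with standard deviation sigma.
        noise = np.random.normal(0, 1, size=x.shape)
        return x + self.sigma * noise, noise

    def reverse(self, x_noisy, predicted_noise):
        # Reverse step: subtract the predicted noise, scaled by sigma.
        return x_noisy - self.sigma * predicted_noise


# Create a diffusion model
model = DiffusionModel(sigma=0.1)

# Add noise to a flat gray image, then remove it again
x_clean = np.full((256, 256, 3), 0.5)
x_noisy, noise = model.forward(x_clean)
# A trained network would estimate the noise from x_noisy alone;
# here the true noise stands in for that prediction.
x = model.reverse(x_noisy, predicted_noise=noise)

# Display the noisy and recovered images side by side
fig, axes = plt.subplots(1, 2)
axes[0].imshow(np.clip(x_noisy, 0, 1))
axes[1].imshow(np.clip(x, 0, 1))
plt.show()
```

This code example adds Gaussian noise to a flat gray image and then removes it again. Because nothing is trained here, the reverse step is handed the true noise. In a real diffusion model, a trained network has to predict that noise from the noisy image alone, and the resulting image is then similar to the data the model was trained on.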

Examples of Diffusion Models

Some popular diffusion models include:

- Denoising Diffusion Probabilistic Models (DDPM): This is one of the most popular diffusion models. It was proposed by Ho et al. in 2020.

- Progressive Growing of GANs (PGAN): Proposed by Karras et al. in 2017. Despite sometimes being listed alongside diffusion models, it is a GAN, so it belongs here only as a point of comparison.

- BigGAN: Proposed by Brock et al. in 2018 and trained on a large image dataset. Like PGAN, it is a GAN rather than a diffusion model.

- DALL-E 2 (2022): A text-to-image diffusion model that can generate realistic and high-quality images from text descriptions.

- Imagen (2022): A text-to-image diffusion model that can generate images with higher photorealism and detail than previous models.

- GLIDE (2021): A text-guided diffusion model that supports both image generation and image editing.

- Parti (2022): A text-to-image model that is autoregressive rather than diffusion-based. It is often compared with diffusion models such as Imagen.

- NerfDiffusion (2022): A diffusion model for 3D scene generation.

There are hundreds of diffusion models out there (if not thousands) and they are growing on a daily basis.

Comparison with Other Generative Models

Diffusion models are similar to other generative models, such as variational autoencoders (VAEs) and generative adversarial networks (GANs). However, there are some key differences between diffusion models and these other models.

One difference is that diffusion models are typically easier to train than GANs. This is because diffusion models do not require a generative model to be trained in tandem with a discriminative model, which is what makes GAN training unstable. VAEs also avoid a discriminator, so this advantage applies mainly to GANs.

Another difference is that diffusion models can generate higher-quality images than VAEs and GANs. Diffusion models do not suffer from the mode collapse problem that can occur in GANs, and they tend to produce sharper samples than VAEs, whose outputs are often blurry.

Conclusion

Diffusion models are a powerful tool for generating realistic images. They are relatively easy to train and can be used to generate images from a variety of different domains. Diffusion models are a promising new approach to generative modeling, and they are likely to be used in a variety of applications in the future.

I hope this article has given you a good understanding of diffusion models. If you enjoyed it, do consider checking out more articles.